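Preface

When a single camera's field of view is too narrow, we can merge two views to widen the frontal field of view and see a broader scene. There are two approaches to stitching: the first is the Stitcher class, and the second is feature point matching.

This article uses feature point matching to merge and stitch the views of two images.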

Demo

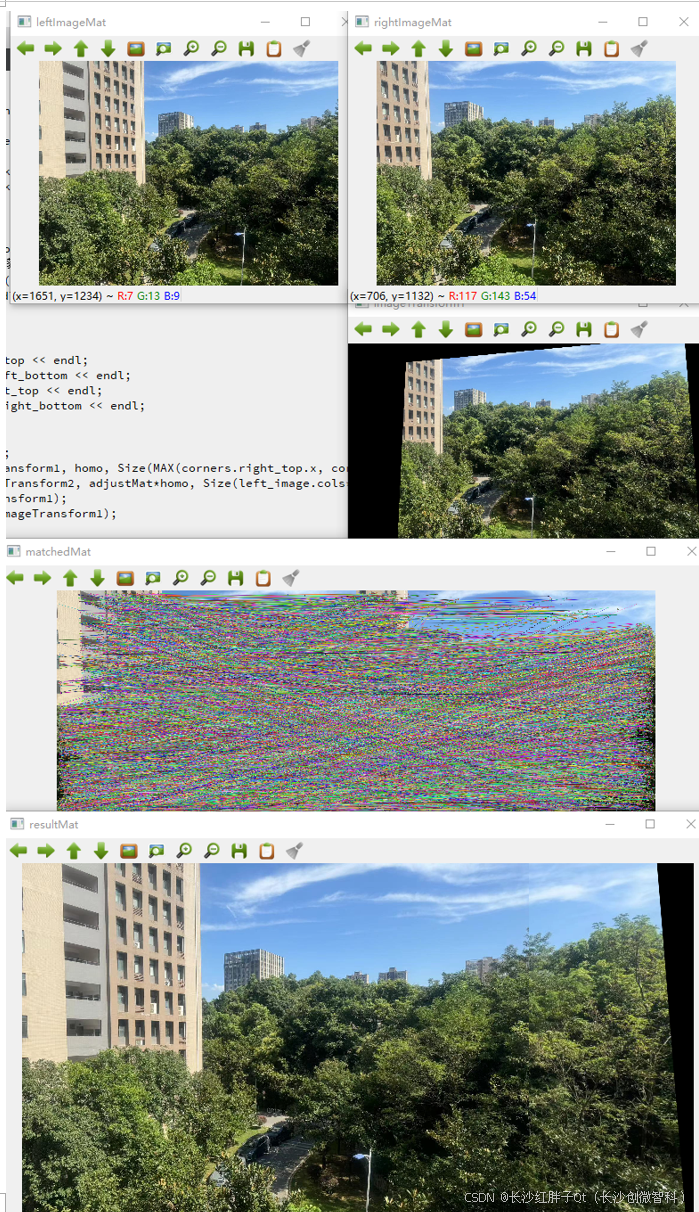

Using 100% of the matched points

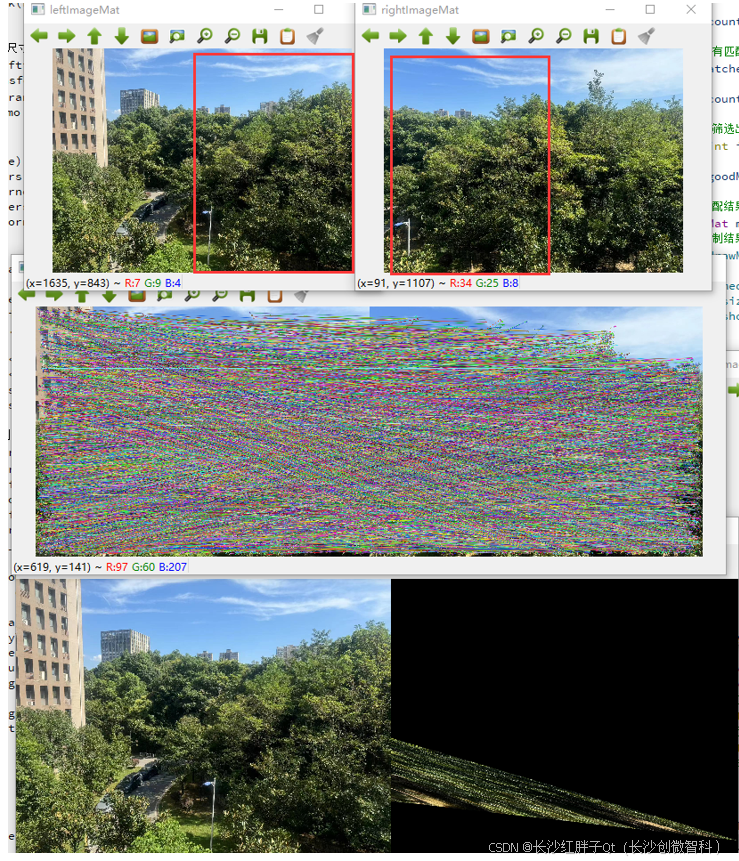

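With a different pair of images:

So the traditional OpenCV approach places some requirements on the feature points. (Note: stitching these two images into a panorama with the Stitcher class fails!)

The stitching process for two images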

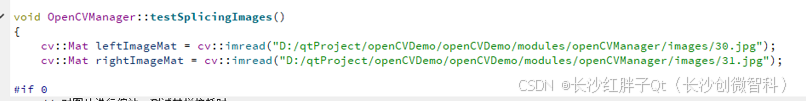

Step 1: Open the images

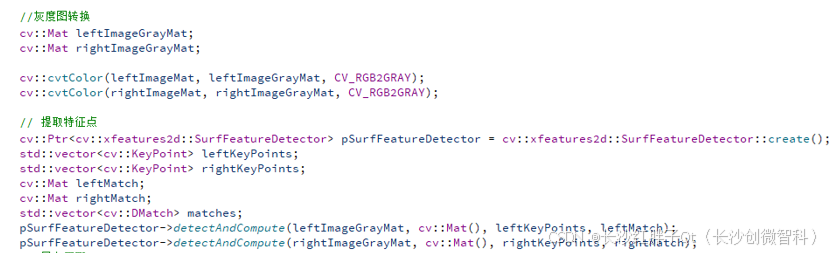

    // Load the left and right images (the paths are specific to the author's machine)
    cv::Mat leftImageMat = cv::imread("D:/qtProject/openCVDemo/openCVDemo/modules/openCVManager/images/30.jpg");
    cv::Mat rightImageMat = cv::imread("D:/qtProject/openCVDemo/openCVDemo/modules/openCVManager/images/31.jpg");

Step 2: Extract feature points

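    // Assumption: the original does not show how the grayscale images are created;
    // converting with cv::cvtColor is one way to obtain them.
    cv::Mat leftImageGrayMat;
    cv::Mat rightImageGrayMat;
    cv::cvtColor(leftImageMat, leftImageGrayMat, cv::COLOR_BGR2GRAY);
    cv::cvtColor(rightImageMat, rightImageGrayMat, cv::COLOR_BGR2GRAY);
    // Extract feature points with SURF (requires the opencv_contrib xfeatures2d module)
    cv::Ptr<cv::xfeatures2d::SurfFeatureDetector> pSurfFeatureDetector = cv::xfeatures2d::SurfFeatureDetector::create();
    std::vector<cv::KeyPoint> leftKeyPoints;
    std::vector<cv::KeyPoint> rightKeyPoints;
    // Despite their names, leftMatch and rightMatch hold the descriptors of each image
    cv::Mat leftMatch;
    cv::Mat rightMatch;
    std::vector<cv::DMatch> matches;
    pSurfFeatureDetector->detectAndCompute(leftImageGrayMat, cv::Mat(), leftKeyPoints, leftMatch);
    pSurfFeatureDetector->detectAndCompute(rightImageGrayMat, cv::Mat(), rightKeyPoints, rightMatch);

Step 3: Brute-force matching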

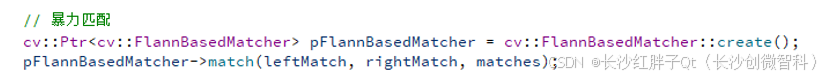
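To make the idea behind this step concrete, here is a minimal sketch of exhaustive nearest-neighbor matching in plain C++, without OpenCV. The `Match` struct, the `Descriptor` alias, and the function names are hypothetical stand-ins for illustration (loosely mirroring `cv::DMatch`); this is not how OpenCV implements its matchers.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for cv::DMatch: indices into both descriptor sets plus a distance.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Assumption: a descriptor is a plain vector of floats.
using Descriptor = std::vector<float>;

// Squared Euclidean distance between two descriptors of equal length.
float squaredDistance(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// For every query descriptor, scan the whole train set and keep the nearest one.
std::vector<Match> bruteForceMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train) {
    std::vector<Match> matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        int best = -1;
        float bestDist = std::numeric_limits<float>::max();
        for (std::size_t t = 0; t < train.size(); ++t) {
            const float d = squaredDistance(query[q], train[t]);
            if (d < bestDist) {
                bestDist = d;
                best = static_cast<int>(t);
            }
        }
        // No match is produced when the train set is empty.
        if (best >= 0) {
            matches.push_back({static_cast<int>(q), best, std::sqrt(bestDist)});
        }
    }
    return matches;
}
```

Each query descriptor yields at most one match, so the cost grows with the product of the two set sizes. That cost is the usual reason to prefer an approximate search on large descriptor sets.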

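    // Match the descriptors. Note: despite the step title, cv::FlannBasedMatcher performs
    // approximate nearest-neighbor search; cv::BFMatcher is the exhaustive (brute-force) matcher.
    cv::Ptr<cv::FlannBasedMatcher> pFlannBasedMatcher = cv::FlannBasedMatcher::create();
    pFlannBasedMatcher->match(leftMatch, rightMatch, matches);

Step 4: Keep the better matches from the matching result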

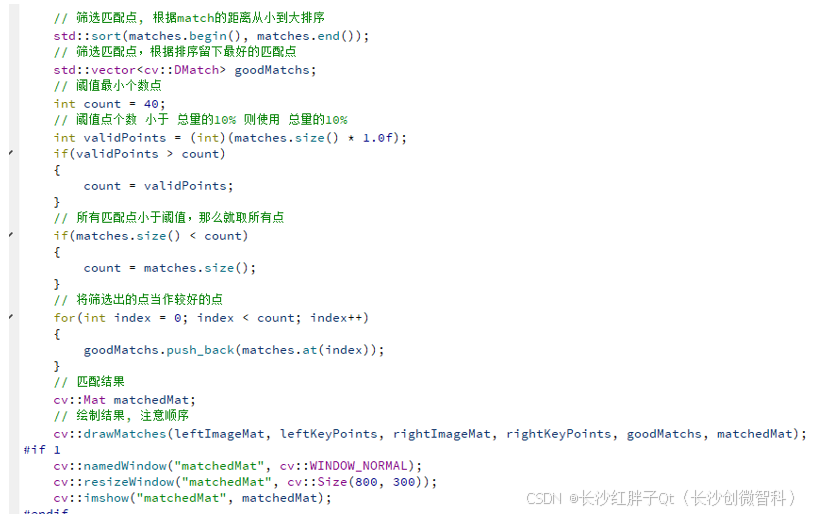
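The selection rule in this step (sort by distance, keep at least a minimum number of matches or a fraction of the total, and never more than are available) can be sketched as a small standalone function. The `Match` struct and `keepBestMatches` are hypothetical names used only for illustration; the parameters `minCount` and `fraction` correspond to the hard-coded values in the article's code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keep the matches with the smallest distances.
// The number kept is max(minCount, fraction * total), clamped to the total.
std::vector<Match> keepBestMatches(std::vector<Match> matches,
                                   std::size_t minCount,
                                   float fraction) {
    assert(fraction >= 0.0f && fraction <= 1.0f);
    // Ascending order: the smallest distance (best match) comes first.
    std::sort(matches.begin(), matches.end(),
              [](const Match& a, const Match& b) { return a.distance < b.distance; });
    std::size_t count =
        std::max(minCount, static_cast<std::size_t>(matches.size() * fraction));
    count = std::min(count, matches.size());
    matches.resize(count);
    return matches;
}
```

With `fraction` set to `1.0f` every match is kept, which corresponds to the "100% of the matched points" demo; a smaller fraction keeps only the closest matches and usually leaves fewer outliers for the later homography estimation to reject.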

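    // Sort the matches by distance in ascending order (cv::DMatch compares by distance)
    std::sort(matches.begin(), matches.end());
    // Keep the best matches according to the sorted order
    std::vector<cv::DMatch> goodMatchs;
    // Minimum number of matches to keep
    int count = 40;
    // If the chosen fraction of all matches exceeds the minimum, use that instead
    // (the factor is 1.0f here, i.e. 100% of the matches; use 0.1f for 10%)
    int validPoints = (int)(matches.size() * 1.0f);
    if(validPoints > count)
    {
        count = validPoints;
    }
    // If there are fewer matches than the threshold, take all of them
    if((int)matches.size() < count)
    {
        count = (int)matches.size();
    }
    // Treat the selected matches as the good ones
    for(int index = 0; index < count; index++)
    {
        goodMatchs.push_back(matches.at(index));
    }
    // Image that shows the matches
    cv::Mat matchedMat;
    // Draw the result; note the argument order
    cv::drawMatches(leftImageMat, leftKeyPoints, rightImageMat, rightKeyPoints, goodMatchs, matchedMat);
    #if 1
    cv::namedWindow("matchedMat", cv::WINDOW_NORMAL);
    cv::resizeWindow("matchedMat", cv::Size(800, 300));
    cv::imshow("matchedMat", matchedMat);
    #endif

Step 5: Compute the transformation matrix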

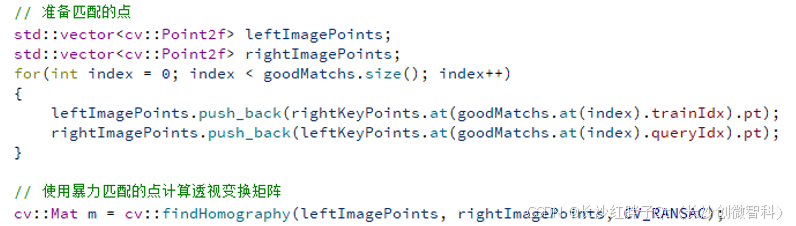
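For reference, the matrix computed in this step is a homography: a 3×3 matrix $H$ that relates a point $(x, y)$ in the source image to a point $(x', y')$ in the destination image, up to a scale factor $s$. The symbols below are introduced here for illustration only and do not appear in the article's code.

$$
s \begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix}
= H \begin{bmatrix} x \\ y \\ 1 \end{bmatrix},
\qquad
x' = \frac{h_{11}x + h_{12}y + h_{13}}{h_{31}x + h_{32}y + h_{33}},
\quad
y' = \frac{h_{21}x + h_{22}y + h_{23}}{h_{31}x + h_{32}y + h_{33}}
$$

Since $H$ is only defined up to scale, it has 8 degrees of freedom, so at least 4 point correspondences are needed. RANSAC uses the extra correspondences to discard outliers.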

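    // Collect the matched point pairs.
    // Note the naming: leftImagePoints holds points from the right image (trainIdx) and
    // rightImagePoints holds points from the left image (queryIdx), so the resulting
    // matrix maps right-image coordinates into the left image's frame.
    std::vector<cv::Point2f> leftImagePoints;
    std::vector<cv::Point2f> rightImagePoints;
    for(int index = 0; index < (int)goodMatchs.size(); index++)
    {
        leftImagePoints.push_back(rightKeyPoints.at(goodMatchs.at(index).trainIdx).pt);
        rightImagePoints.push_back(leftKeyPoints.at(goodMatchs.at(index).queryIdx).pt);
    }
    // Compute the perspective transform (homography) from the matched points, using RANSAC
    // (cv::RANSAC replaces the legacy CV_RANSAC macro)
    cv::Mat m = cv::findHomography(leftImagePoints, rightImagePoints, cv::RANSAC);

Step 6: Compute the size of the second image after the transform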
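The corner computation in this step amounts to pushing the four image corners through the 3×3 matrix and dividing by the third homogeneous coordinate. Here is a minimal OpenCV-free sketch of that idea, extended to also return the bounding box of the warped corners. `Point2`, `Mat3`, `Bounds`, `projectPoint`, and `warpedBounds` are hypothetical names chosen for illustration.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

struct Point2 {
    double x;
    double y;
};

// Row-major 3x3 matrix, standing in for the CV_64F homography.
using Mat3 = std::array<std::array<double, 3>, 3>;

// Map (x, y, 1) through H, then divide by the third coordinate.
Point2 projectPoint(const Mat3& H, double x, double y) {
    const double u = H[0][0] * x + H[0][1] * y + H[0][2];
    const double v = H[1][0] * x + H[1][1] * y + H[1][2];
    const double w = H[2][0] * x + H[2][1] * y + H[2][2];
    assert(std::abs(w) > 1e-12);  // w near zero means the point maps to infinity
    return {u / w, v / w};
}

struct Bounds {
    double minX;
    double minY;
    double maxX;
    double maxY;
};

// Project the four corners of a cols x rows image and return their bounding box.
Bounds warpedBounds(const Mat3& H, int cols, int rows) {
    const std::array<Point2, 4> corners = {{
        projectPoint(H, 0, 0),        // top-left
        projectPoint(H, 0, rows),     // bottom-left
        projectPoint(H, cols, 0),     // top-right
        projectPoint(H, cols, rows),  // bottom-right
    }};
    Bounds b = {corners[0].x, corners[0].y, corners[0].x, corners[0].y};
    for (const Point2& p : corners) {
        b.minX = std::min(b.minX, p.x);
        b.minY = std::min(b.minY, p.y);
        b.maxX = std::max(b.maxX, p.x);
        b.maxY = std::max(b.maxY, p.y);
    }
    return b;
}
```

For a pure translation by (100, 20), a 640×480 image yields corners spanning x from 100 to 740 and y from 20 to 500. The maximum x and y of the warped corners are one plausible basis for choosing the size of the output canvas.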

    // Compute the size of the second image after the transform
    cv::Point2f leftTopPoint2f;
    cv::Point2f leftBottomPoint2f;
    cv::Point2f rightTopPoint2f;
    cv::Point2f rightBottomPoint2f;
    cv::Mat H = m.clone();
    // Note: src is a copy of the left image; its size matches the second image
    // only if both images have the same dimensions.
    cv::Mat src = leftImageMat.clone();
    {
        cv::Mat V1;
        cv::Mat V2;
        // Top-left corner (0, 0, 1)
        double v2[3] = {0, 0, 1};
        // Coordinates after the transform
        double v1[3];
        // Column vectors that wrap the arrays above
        V2 = cv::Mat(3, 1, CV_64FC1, v2);
        V1 = cv::Mat(3, 1, CV_64FC1, v1);
        V1 = H * V2;
        leftTopPoint2f.x = v1[0] / v1[2];
        leftTopPoint2f.y = v1[1] / v1[2];
        // Bottom-left corner (0, src.rows, 1)
        v2[0] = 0;
        v2[1] = src.rows;
        v2[2] = 1;
        V2 = cv::Mat(3, 1, CV_64FC1, v2);
        V1 = cv::Mat(3, 1, CV_64FC1, v1);
        V1 = H * V2;
        leftBottomPoint2f.x = v1[0] / v1[2];
        leftBottomPoint2f.y = v1[1] / v1[2];
        // Top-right corner (src.cols, 0, 1)
        v2[0] = src.cols;
        v2[1] = 0;
        v2[2] = 1;
        V2 = cv::Mat(3, 1, CV_64FC1, v2);
        V1 = cv::Mat(3, 1, CV_64FC1, v1);
        V1 = H * V2;
        rightTopPoint2f.x = v1[0] / v1[2];
        rightTopPoint2f.y = v1[1] / v1[2];
        // Bottom-right corner (src.cols, src.rows, 1)
        v2[0] = src.cols;
        v2[1] = src.rows;
        v2[2] = 1;
        V2 = cv::Mat