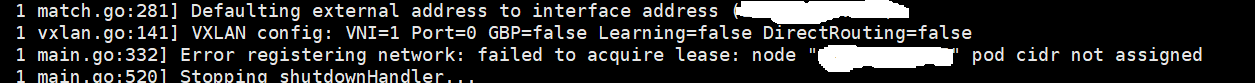

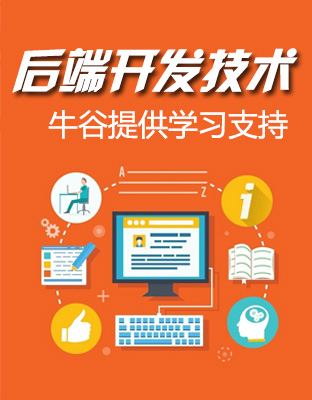

Symptom

The CNI pods are stuck in CrashLoopBackOff.

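Root cause

By default, Kubernetes gives each node a pod (CNI) subnet with a 24-bit mask. With a /16 pod CIDR that leaves only 256 node subnets, so once the cluster has more nodes than that, there are no subnets left to allocate. There are two ways to fix this.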
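The arithmetic behind this limit can be sketched in a few lines of Python. This is only an illustration: the helper name `node_subnet_capacity` is made up here, and the /16 pod CIDR is the `podSubnet` value used in this post's configuration.

```python
import ipaddress


def node_subnet_capacity(cluster_cidr: str, node_mask: int) -> tuple[int, int]:
    """Return (number of per-node subnets, addresses in each subnet)."""
    network = ipaddress.ip_network(cluster_cidr, strict=False)
    if node_mask < network.prefixlen:
        raise ValueError("node mask must not be shorter than the cluster CIDR prefix")
    # Each extra mask bit doubles the number of subnets and halves their size.
    node_subnets = 2 ** (node_mask - network.prefixlen)
    addresses_per_node = 2 ** (network.max_prefixlen - node_mask)
    return node_subnets, addresses_per_node


for mask in (24, 26):
    subnets, addresses = node_subnet_capacity("172.244.0.0/16", mask)
    print(f"/{mask}: {subnets} node subnets, {addresses} addresses each")
```

A longer mask trades pods per node for node count: a /26 leaves 64 addresses per node, which is below the kubelet's default limit of 110 pods per node, so that limit may deserve a look as well.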

Solution

For a new cluster, set the kube-controller-manager argument in the kubeadm configuration used for kubeadm init:

    apiVersion: kubeadm.k8s.io/v1beta3
    kind: InitConfiguration
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    localAPIEndpoint:
      advertiseAddress: 192.168.10.11
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      imagePullPolicy: IfNotPresent
      taints: null
    ---
    apiVersion: kubeadm.k8s.io/v1beta3
    kind: ClusterConfiguration
    apiServer:
      certSANs:
      - master1
      - 192.168.10.11
      timeoutForControlPlane: 4m0s
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: 192.168.10.10:6443
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    controllerManager:
      extraArgs: # use a 26-bit mask for each node's subnet
        "node-cidr-mask-size": "26"
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kubernetesVersion: 1.23.0
    networking:
      podSubnet: 172.244.0.0/16
      serviceSubnet: 172.66.0.0/12
    scheduler: {}
    ---
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    cgroupDriver: cgroupfs

For a cluster that is already running, fix it as follows.

Edit the arguments under spec.containers[0].command in /etc/kubernetes/manifests/kube-controller-manager.yaml:

    ....
    spec:
      containers:
      - command:
        - kube-controller-manager
        - --allocate-node-cidrs=true
        - --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf
        - --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf
        - --bind-address=127.0.0.1
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --cluster-cidr=172.244.0.0/16
        - --cluster-name=kubernetes
        - --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt
        - --cluster-signing-key-file=/etc/kubernetes/pki/ca.key
        - --controllers=*,bootstrapsigner,tokencleaner
        - --kubeconfig=/etc/kubernetes/controller-manager.conf
        - --leader-elect=true
        - --node-cidr-mask-size=26 # add this flag
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
        - --root-ca-file=/etc/kubernetes/pki/ca.crt
        - --service-account-private-key-file=/etc/kubernetes/pki/sa.key
        - --service-cluster-ip-range=172.66.0.0/12
        - --use-service-account-credentials=true
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.23.0
    ...
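After editing the manifest, it can help to double-check which mask the command line actually requests. The following is a minimal sketch, not part of any Kubernetes tooling: `parse_flags` and `effective_node_mask` are hypothetical helpers, and the command list is shortened from the manifest above. The fallback of 24 is the documented IPv4 default of `--node-cidr-mask-size`.

```python
DEFAULT_IPV4_NODE_MASK = 24  # kube-controller-manager default for IPv4


def parse_flags(command):
    """Turn ['--key=value', ...] into a dict, ignoring anything else."""
    flags = {}
    for arg in command:
        if arg.startswith("--") and "=" in arg:
            key, value = arg[2:].split("=", 1)
            flags[key] = value
    return flags


def effective_node_mask(command):
    """Return the node CIDR mask the command line asks for (default if absent)."""
    flags = parse_flags(command)
    return int(flags.get("node-cidr-mask-size", DEFAULT_IPV4_NODE_MASK))


# Shortened copy of spec.containers[0].command from the manifest
command = [
    "kube-controller-manager",
    "--allocate-node-cidrs=true",
    "--cluster-cidr=172.244.0.0/16",
    "--node-cidr-mask-size=26",
]
print(effective_node_mask(command))
```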

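Restart kube-controller-manager on the master node (the kubelet normally recreates the static pod on its own once the manifest file changes).

Nodes that had already joined the cluster keep their existing subnet; remove such a node and join it to the cluster again, and its subnet mask becomes 26 bits.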
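To see which nodes still hold an old /24 allocation, one option is to inspect `spec.podCIDR` on each Node object. The sketch below assumes the JSON comes from `kubectl get nodes -o json`; the function name `pod_cidr_prefixes` is hypothetical, and the sample node names and CIDRs are made up for illustration.

```python
import ipaddress
import json


def pod_cidr_prefixes(nodes_json: str) -> dict:
    """Map node name -> prefix length of spec.podCIDR (None if unassigned)."""
    result = {}
    for node in json.loads(nodes_json)["items"]:
        name = node["metadata"]["name"]
        cidr = node.get("spec", {}).get("podCIDR")
        result[name] = ipaddress.ip_network(cidr).prefixlen if cidr else None
    return result


# Sample input shaped like `kubectl get nodes -o json` (made-up values)
sample = json.dumps({"items": [
    {"metadata": {"name": "node-old"}, "spec": {"podCIDR": "172.244.3.0/24"}},
    {"metadata": {"name": "node-rejoined"}, "spec": {"podCIDR": "172.244.8.64/26"}},
]})

for name, prefix in pod_cidr_prefixes(sample).items():
    print(name, prefix)
```

Any node still reporting a prefix of 24 has not been rejoined yet.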
